Excerpt from this Retail Tech Podcast research note.

What if your AI agent was always correct?

In the age of generative AI, the word hallucination has become something of a euphemism — a gentle way to describe when powerful AI systems make things up. In casual use, it's a tolerable side effect. In commerce, medicine, finance, or governance, it can be catastrophic.

As we race toward more capable AI agents — and eventually AGI — one question looms large:

Is it possible to build a zero-hallucination AI and, more specifically for our industry, hallucination-free shopping agents?

The answer reveals not only the technical boundaries of current systems, but also the deeper philosophical limitations of trying to create intelligence modeled on imperfect human knowledge.

Of course, our ultimate goal should be systems that are correct all the time. That said, given the limits of reality and of human knowledge, a second question is whether perfect AI systems are a valid, or even important, goal.

What Are AI Hallucinations?

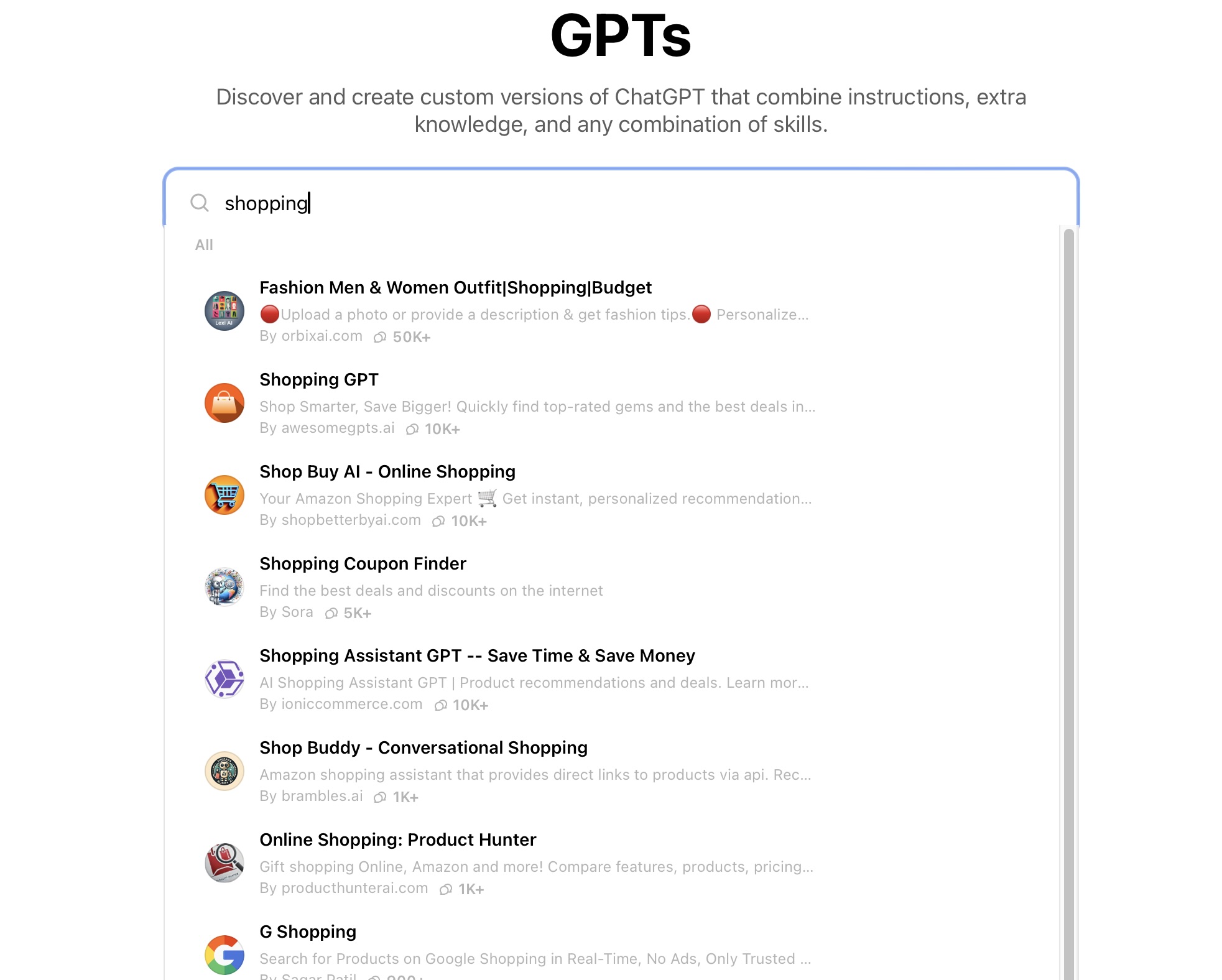

AI-powered shopping agents are quickly becoming a part of how people discover and buy products. Ask a question, and the system responds — conversationally, instantly, and often helpfully. The experience feels effortless: natural language in, relevant suggestions out. It’s no surprise that brands and retailers are eager to adopt them.

But as these agents become more visible and more polished, it’s worth pausing to ask: what’s actually happening under the hood? What kind of intelligence do these agents have — and just how far can we expect them to go?

The truth is, most shopping agents today are highly constrained — deliberately so. These systems are carefully engineered to avoid hallucinations, misinformation, and missteps. In a retail environment, getting something wrong — like product availability or return policy — can erode trust and create real operational costs. That’s why these agents are built around strict guardrails that define what they’re allowed to say, and how.
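To make the idea of a guardrail concrete, here is a minimal sketch in Python. It assumes a hypothetical in-memory catalog and a hypothetical allowlist of answerable fields (`CATALOG`, `ALLOWED_FIELDS`, and `answer` are illustrative names, not any vendor's API). The agent only answers from verified records and falls back to a safe response for everything else.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Product:
    sku: str
    name: str
    price: float
    in_stock: bool


# Hypothetical catalog; a real agent would read from the retailer's systems of record.
CATALOG = {
    "SKU-1": Product("SKU-1", "Cotton T-Shirt", 19.99, True),
    "SKU-2": Product("SKU-2", "Wool Sweater", 79.00, False),
}

# Assumption: the agent is only allowed to answer questions about these fields.
ALLOWED_FIELDS = {"price", "in_stock"}

FALLBACK = "I don't have verified information on that. Let me connect you with support."


def answer(sku: str, field: str) -> str:
    """Answer only from catalog data; never improvise a reply."""
    product = CATALOG.get(sku)
    if product is None or field not in ALLOWED_FIELDS:
        return FALLBACK
    if field == "price":
        return f"{product.name} costs ${product.price:.2f}."
    status = "in stock" if product.in_stock else "out of stock"
    return f"{product.name} is currently {status}."
```

Here `answer("SKU-1", "return_policy")` returns the fallback rather than a guess, because return policies are not on the allowlist. The trade-off is the one described above: the agent is less flexible, but it cannot state something the catalog does not contain.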

The Problem With Hallucinations in Commerce

If you’ve experimented with AI chatbots or digital assistants in ecommerce, you’ve likely seen this:

- Incorrect prices

- Imaginary products or inventory

- Made-up return policies

- Confident answers that aren’t grounded in any real data

These aren’t just bugs — they’re a known limitation of large language models (LLMs). These models are trained to predict the next word, not verify the truth.
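Because the model itself does not verify anything, one common mitigation is to check a drafted reply against real data before it reaches the shopper. The sketch below is only an illustration under stated assumptions: `VERIFIED_PRICES` is a hypothetical lookup table, and the check covers dollar prices only, not every kind of claim.

```python
import re

# Hypothetical verified prices; in practice these would come from a pricing service.
VERIFIED_PRICES = {"Cotton T-Shirt": 19.99}

# Matches dollar amounts such as $19.99 or $20.
PRICE_PATTERN = re.compile(r"\$(\d+(?:\.\d{1,2})?)")


def prices_are_grounded(draft: str, product_name: str) -> bool:
    """Return True only if every price quoted in the draft matches verified data."""
    verified = VERIFIED_PRICES.get(product_name)
    if verified is None:
        # No verified record for this product: treat the draft as ungrounded.
        return False
    quoted = [float(amount) for amount in PRICE_PATTERN.findall(draft)]
    return all(abs(price - verified) < 0.005 for price in quoted)
```

A draft such as "The Cotton T-Shirt is $14.99 today" fails this check and can be blocked or regenerated, while a draft quoting $19.99 passes. A draft that quotes no price at all also passes, since there is no price claim to verify.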

Hallucinations, which occur when AI agents generate false, misleading, or invented information, can be quietly harmful or explosively damaging, depending on the context. Here is a breakdown of how they affect both consumers and companies, in practical and reputational terms:

For Consumers

1. Misdirected Purchases

A shopper buys something based on false specs (e.g. “machine washable” or “compatible with X”), only to discover it isn’t. This leads to frustration, returns, and loss of trust.

In some verticals (health, parenting, home improvement), this could be dangerous — e.g. wrong usage instructions, dosage suggestions, or safety ratings.

2. Wasted Time

Agents hallucinating product availability, price, or location send users down dead ends.

Consumers may go to a store or click to buy only to find the item doesn’t exist or is out of stock.

3. Missed Opportunities

If the agent confidently recommends an inferior or irrelevant product, it can crowd out better options the user might have otherwise found.

4. Erosion of Trust

Even a few bad experiences with hallucinated content can lead users to distrust the entire assistant — and the brand behind it. The promise of convenience becomes a liability.

For Companies

1. Operational Cost

Hallucinations increase return rates, customer service volume, and re-engagement costs.

Even minor mistakes (“this ships in 2 days” when it’s actually 5) generate support tickets and churn.

2. Reputational Damage

If a brand’s AI agent misrepresents pricing, availability, or return policies, it can spark public backlash — especially if users feel deceived.

Social media screenshots of "dumb" or "lying" bots spread fast and carry long-term brand consequences.

3. Legal Risks...

Read the rest on Retail Tech Podcast.