Why Identity Verification Is Obsolete—and Narrative Function Is the Only Signal That Matters

Executive Summary

Influence analysis has long relied on identity-based signals—authorship, biography, institutional affiliation, and publication history—to assess credibility and agency. These signals once correlated meaningfully with accountability and risk. They no longer do. In an information environment shaped by coordinated narrative operations, AI-assisted authorship, and institutional content pipelines, identity artifacts have become cheap to produce, easy to maintain, and increasingly detached from independent moral agency.

This paper argues that the resulting analytical failure is not subtle. Analysts, platforms, and now agentic AI systems routinely misclassify narrative outputs, over-trusting surface legitimacy while failing to recognize systemic function. The problem compounds over time: narratives that appear independent repeatedly converge on the same constraints, the same cautions, and the same limits of escalation, yet remain insulated from scrutiny because no single identity signal appears fraudulent.

To address this failure, the paper proposes a shift in analytical framing. Instead of treating individuals as sovereign authors whose credibility can be verified, analysts must treat narrative outputs as products of narrative nodes: functional entities defined by what their output does, when it appears, how it behaves under pressure, and whose interests it consistently serves. In the agentic future—where autonomous systems will ingest, rank, and act on narrative content—this shift is no longer optional. It is a prerequisite for detecting influence at all.

1. Problem Statement

Modern influence analysis still relies on outdated signals of authenticity—identity, biography, authorship, institutional affiliation—to assess credibility and agency. These signals are deeply embedded in analytical workflows, editorial judgment, platform governance, and increasingly, AI training pipelines.

In an environment shaped by coordinated narrative operations, AI-assisted authorship, and institutional content production, these signals no longer reliably distinguish independent moral actors from managed or instrumental personas. Yet they continue to be treated as if they do.

The consequence is not a single error, but a pattern of misclassification. Analysts encounter voices that appear legitimate, well-reasoned, and institutionally endorsed. Each signal checks out. Nothing is provably false. And yet, over time, the outputs exhibit a consistent functional alignment: the same boundaries of acceptable critique, the same reluctance to escalate after rupture events, the same framing that absorbs pressure rather than increasing it.

This creates a structural blind spot. Discourse is evaluated based on who appears to be speaking rather than what the output does, when it appears, and whose interests it reliably serves. Analysts may sense the pattern intuitively, but lack a framework to name it. Agentic systems, trained on legacy heuristics, do not sense it at all.

Without a functional model of narrative actors, influence systems become harder to detect, easier to scale, and more resilient to scrutiny—precisely as autonomous agents begin to consume, amplify, and act on narrative content.

2. Background and Context

2.1 Identity as a Proxy for Agency

For much of the modern information era, identity functioned as a reasonable proxy for agency. Authorship implied accountability. Institutional affiliation implied vetting. Consistency implied continuity of belief. These assumptions were imperfect, but they worked often enough to support analytical judgment.

Crucially, fabrication was costly. Maintaining a persona required sustained effort, visible coordination, and exposure to reputational risk. When deception occurred, it tended to collapse under scrutiny. Identity, while never definitive, served as a practical bottleneck.

Analytical habits formed under these conditions persist today. Analysts are trained to ask who is speaking, where they are published, and how their biography aligns with their claims. These questions feel rigorous because they once were.

2.2 The Collapse of the Identity–Agency Link

The environment that made those heuristics viable no longer exists.

AI-assisted drafting has reduced the cost of stylistic consistency to near zero. Institutional content pipelines allow outputs to be generated, edited, and sustained without any single author bearing responsibility. Coordinated narrative operations distribute labor across humans, machines, and organizations in ways that leave no clear seam to interrogate.

Continuity no longer implies individuality. Publication no longer implies independence. Identity no longer implies risk.

What remains is an analytical apparatus optimized for a world that has already disappeared.

3. The False Comfort of Identity Artifacts

Influence analysis still relies on a familiar set of authenticity signals:

- Consistent publication history over time
- Bylines across multiple platforms
- Editorial interaction and curation
- Traceable professional footprints
- Linguistic consistency suggesting a single author

Each of these signals appears reasonable in isolation. Together, they create a powerful illusion of credibility.

That illusion persists because the signals themselves have not vanished. They have simply been hollowed out.

Editorial platforms can be ideologically aligned, compromised, or structurally inattentive. Personas can be ghostwritten, committee-maintained, or institutionally sustained for years without any single agent exercising moral discretion. One individual can operate multiple identities; multiple individuals can operate one. AI-assisted drafting smooths style and tone across time and context, eliminating the friction that once revealed human strain.

Institutional legitimacy, once earned slowly, can now be borrowed cheaply.

The continued reliance on these signals is not a failure of intelligence. It is institutional inertia: an analytical system continuing to trust indicators long after their correlation with agency has collapsed.

4. The Misframed Question: “Is This Person Real?”

When confronted with narrative outputs that feel constrained, evasive, or strangely insulated from consequence, analysts often ask a seemingly natural question: Is this person even real?

The instinct is not conspiratorial. It is adaptive. It reflects a recognition that something in the output does not align with expectations of independent moral agency.

The problem is that the question no longer points to the answer.

Fully fabricated personas are rare in contemporary influence environments—not because they are difficult to create, but because they are inefficient and risky. Fabricated identities collapse under scrutiny. They cannot network reliably. They cannot be defended by institutions without reputational cost.

As a result, modern influence systems overwhelmingly prefer real individuals with minimal public biography, operating in opinion or analysis space rather than operational roles, producing content that is plausible, moderate, and deniable. Real people provide cover. They absorb scrutiny. They fail quietly.

The objective is not deception through fiction. It is containment through credibility.

As long as analysts remain trapped in the real-versus-fake binary, they will continue to miss the actual structure of the system they are observing.

5. Analytical Reframing: From Ontology to Function

Once identity artifacts are recognized as unreliable, analysis must pivot from ontology—who is this?—to function—what does this output do?

Attempts to repair identity-based analysis typically fail in predictable ways. Analysts dig deeper into biographies. They scrutinize tone more closely. They search for ideological inconsistencies or hidden affiliations. Each effort promises clarity and delivers diminishing returns.

The problem is not insufficient scrutiny. It is misplaced scrutiny.

When identity becomes cheap and continuity becomes programmable, no amount of biographical resolution restores agency as a stable signal. What remains observable, and difficult to fake at scale, is behavior over time—especially under pressure.

Functional Indicators That Matter

Irreversibility of Positions

Managed narrative actors consistently avoid positions that would permanently alienate power centers or require costly follow-through. Independent agents eventually burn bridges.

Risk Absorption

Narrative functionaries incur no personal, professional, or physical risk, regardless of error. Authentic actors eventually pay a price.

Moral Asymmetry Under Pressure

During rupture events—mass violence, legitimacy collapse—system-aligned voices introduce nuance, caution, and delay precisely when clarity would escalate pressure.

Absence of Rupture Recognition

System-preserving outputs treat catastrophe as one variable among many. The model never rebases. Nothing is allowed to “change everything.”

These patterns are unlikely to arise by accident and costly to suppress consistently over time. They are functional signals, not identity claims.

6. The Narrative Node Model

The concept of a narrative node emerges not from theory, but from repeated analytical dead ends. When different authors, across different platforms, with different biographies, produce functionally indistinguishable outputs under stress, the shared variable is no longer identity. It is role.

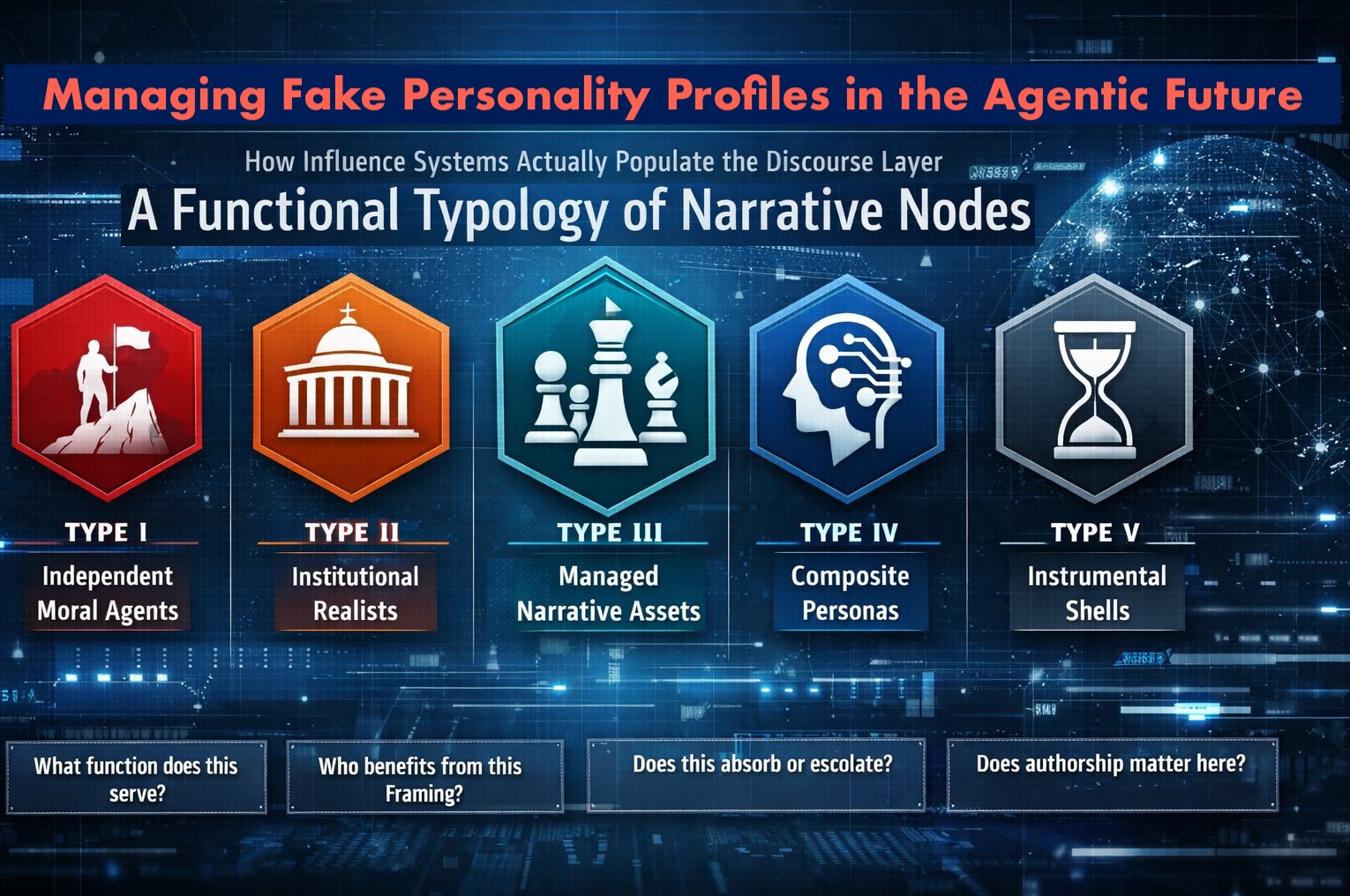

In the agentic future, individuals must therefore be understood as narrative nodes rather than sovereign authors. A node may consist of:

- One human
- Multiple humans
- Human–AI collaboration
- Institutional sustainment
- Entirely instrumental structures

The internal composition of the node is analytically irrelevant if the output function remains consistent.

This reframing dissolves the false debate over existence and redirects attention to systemic impact. The analyst is no longer tracking people, but positions within an influence system.
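
One way to make the node idea concrete is to group outputs by what they do under stress rather than by who signed them. The following is a minimal sketch, not a method prescribed by this paper: the `Output` record, the event labels, and the response labels ("contain", "escalate") are hypothetical and assumed to come from analyst coding. Authors whose response patterns match across the same events fall into the same candidate node. A shared pattern indicates a shared role, not coordination or intent.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Output:
    author: str    # identity artifact (byline); never used as a grouping key
    event: str     # hypothetical label for the stress event being responded to
    response: str  # hypothetical analyst-assigned label, e.g. "contain" or "escalate"


def group_by_function(outputs):
    """Group authors by their pattern of responses across events."""
    # Collect each author's set of (event, response) pairs.
    per_author = defaultdict(set)
    for o in outputs:
        per_author[o.author].add((o.event, o.response))

    # Authors with an identical sorted signature share one candidate node.
    nodes = defaultdict(list)
    for author, pairs in per_author.items():
        nodes[tuple(sorted(pairs))].append(author)
    return {signature: sorted(authors) for signature, authors in nodes.items()}
```

In this sketch the byline survives only as a label inside each group. Everything the analyst compares is behavioral, which is the sense in which the internal composition of a node is treated as irrelevant.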

7. Analytical Takeaways

There is no requirement to prove that a persona is fabricated in order to analyze it critically. Identity artifacts—bylines, platforms, bios, stylistic continuity—no longer establish independent moral agency.

The decisive variables are output behavior, timing, framing, beneficiaries, and exclusions.

In modern influence ecosystems, function supersedes identity.

This shift is no longer theoretical. As agentic systems begin to ingest, rank, and act on narrative content at scale, misclassification ceases to be an academic error and becomes an operational one. Systems trained to trust identity will reliably amplify containment. Systems trained to recognize function retain the ability to detect agency.

The critical distinction in the agentic future is not whether a person exists, but whether a narrative node operates as an independent moral agent or as a system-maintained functionary.

That distinction—not ontology—is where real analytical leverage now lives.

8. Potential Paths Forward

The purpose of this paper is diagnostic rather than prescriptive. However, abandoning identity-based analysis without proposing alternative analytical paths risks leaving analysts and agentic systems directionless.

What follows are not solutions in the engineering sense, but two viable analytical trajectories that preserve rigor while acknowledging uncertainty.

8.1 Functional Classification Over Identity Verification

The first path replaces identity verification with explicit functional classification.

Rather than asking whether a narrative actor is real, independent, or authentic, analysts and systems would classify outputs based on observable behavioral patterns over time, including:

- Response to rupture events
- Willingness to incur irreversible cost
- Consistency of framing under pressure
- Risk distribution between author and audience
- Alignment between stated concern and practical constraint

Under this model, credibility is not binary. Narrative nodes are not labeled as malicious or benign. Instead, they are situated along functional dimensions—such as escalatory vs absorptive, risk-bearing vs risk-shielded, or adaptive vs containment-oriented.

This approach has two advantages. First, it does not require attribution, intent inference, or exposure. Second, it scales naturally to agentic systems, which already operate on pattern recognition rather than moral judgment.

The cost of this approach is discomfort: functional classification does not offer the emotional clarity of calling something “fake.” Its benefit is analytical traction in environments where fakery is no longer the dominant failure mode.
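
A non-binary functional profile of the kind described in this subsection could be represented as follows. This is a hedged sketch under stated assumptions: the dimension names, the score range of -1.0 to 1.0, and the use of a simple average are illustrative choices, and the scores themselves are assumed to be assigned by an analyst or an upstream classifier that this paper does not specify.

```python
from dataclasses import dataclass
from statistics import mean

# Hypothetical dimension names. By the convention assumed here, negative
# values lean toward the second pole (absorptive, shielded, containment).
DIMENSIONS = (
    "escalatory_vs_absorptive",
    "risk_bearing_vs_shielded",
    "adaptive_vs_containment",
)


@dataclass
class Observation:
    scores: dict  # dimension name -> assigned score in [-1.0, 1.0]


def functional_profile(observations):
    """Average the available scores per dimension; None means no evidence."""
    profile = {}
    for dim in DIMENSIONS:
        values = [o.scores[dim] for o in observations if dim in o.scores]
        profile[dim] = mean(values) if values else None
    return profile
```

Returning `None` for an unobserved dimension, rather than a neutral zero, keeps "no evidence" distinct from "balanced behavior". No label such as malicious or benign appears anywhere in the structure.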

8.2 Rupture-Centered Analysis as a Stress Test

The second path treats rupture events as the primary analytical lens.

Most narrative outputs appear reasonable during periods of stability. It is under rupture (mass violence, legitimacy collapse, irreversible political thresholds) that functional roles become visible. At these moments, narrative nodes are forced to choose between escalation and containment, clarity and delay, moral rebasing and continuity.

A rupture-centered approach would explicitly deprioritize steady-state analysis and instead ask:

- How does this narrative behave when existing frames break?
- Does it rebase its moral model, or preserve it?
- Does it absorb uncertainty, or convert it into pressure?
- Does it treat rupture as decisive, or as merely contextual?

These signals are difficult to manufacture accidentally and costly to suppress consistently. For agentic systems, rupture-centered weighting offers a way to distinguish agency from role without relying on identity, biography, or institutional legitimacy.

The risk of this approach is latency: rupture events are episodic. The benefit is clarity when it matters most.
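
Rupture-centered weighting can be illustrated with a weighted average that counts behavior during rupture more heavily than steady-state behavior. The sketch below is illustrative only: the score convention (-1.0 for containment, +1.0 for rebasing), the default weights of 5.0 and 1.0, and the function name are assumptions rather than values proposed by this paper. The latency risk appears as an explicit `None` when no rupture observations exist yet.

```python
def rupture_weighted_score(observations, rupture_weight=5.0, steady_weight=1.0):
    """Weighted mean of scores, emphasizing behavior during rupture.

    observations: list of (score, during_rupture) pairs, where score is in
    [-1.0, 1.0] and during_rupture is a bool. Returns None when there is no
    rupture-period evidence, since steady-state data alone is not trusted.
    """
    if not any(during for _, during in observations):
        return None  # latency: nothing decisive has been observed yet

    total = 0.0
    weight_sum = 0.0
    for score, during in observations:
        weight = rupture_weight if during else steady_weight
        total += weight * score
        weight_sum += weight
    return total / weight_sum
```

With these assumed weights, a node that appears mildly escalatory in calm periods but contains during a single rupture ends up with a clearly negative score. That is the intended effect of deprioritizing steady-state analysis.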

Rupture Events: An Explanation

Rupture as the Moment Analysis Is Forced to Reveal Itself

Most narratives survive not because they are true, but because they are sufficient. During periods of stability, existing frames—political, moral, institutional—can absorb contradiction with relatively little strain. New information is contextualized, complexity is invoked, and ambiguity is treated as prudence. Under these conditions, independent actors and managed personas often appear indistinguishable. Moderation looks reasonable. Delay reads as responsibility.

Rupture events disrupt this equilibrium.

A rupture occurs when reality can no longer be plausibly contained by the narratives that preceded it. Incremental explanations fail. Calls for “more context” stop clarifying and begin to obscure. The moral baseline itself is challenged, and with it the legitimacy of continuing as before. At that point, analysis is no longer a matter of interpretation but of response.

What makes rupture analytically decisive is not severity alone, but irreversibility. Something has happened that cannot be undone, reframed away, or absorbed without cost. The question ceases to be what does this mean? and becomes what must change?

It is at this moment that narrative function becomes visible.

Independent moral agents tend to rebase their models under rupture. They acknowledge discontinuity. They accept that prior assumptions no longer hold and that escalation—of language, of accountability, of consequence—may be unavoidable. This shift is rarely comfortable and often costly.

Managed narrative actors behave differently. Faced with rupture, they introduce caution precisely when clarity would increase pressure. They emphasize complexity where moral compression demands resolution. They treat the rupture as one variable among many, preserving continuity not because it explains reality, but because continuity is their function.

These responses are difficult to disguise because rupture compresses time and reduces interpretive space. The range of defensible reactions narrows. Choices that once appeared equivalent diverge sharply in consequence. Containment becomes visible not as moderation, but as preservation.

For analysts and agentic systems alike, rupture events function as stress tests. They reveal which narratives are capable of change and which exist to prevent it. They expose alignment not through attribution or intent, but through behavior under constraint. In an environment where identity signals are cheap and continuity is programmable, rupture is one of the few remaining moments where function cannot easily hide.

Final note: these paths are not exhaustive. They do not promise to eliminate influence operations, nor do they guarantee correct classification. They do, however, realign analysis with the only remaining stable variable in the system: function under pressure.